About Me

I was a research scientist at Apple, where I led an applied research team focused on neural rendering and generative AI. I received my Ph.D. from MIT and my undergraduate degree from HKUST.

Shipped Products

Products I contributed to include:

- Depth estimation on ARKit

- Depth estimation on Vision Pro

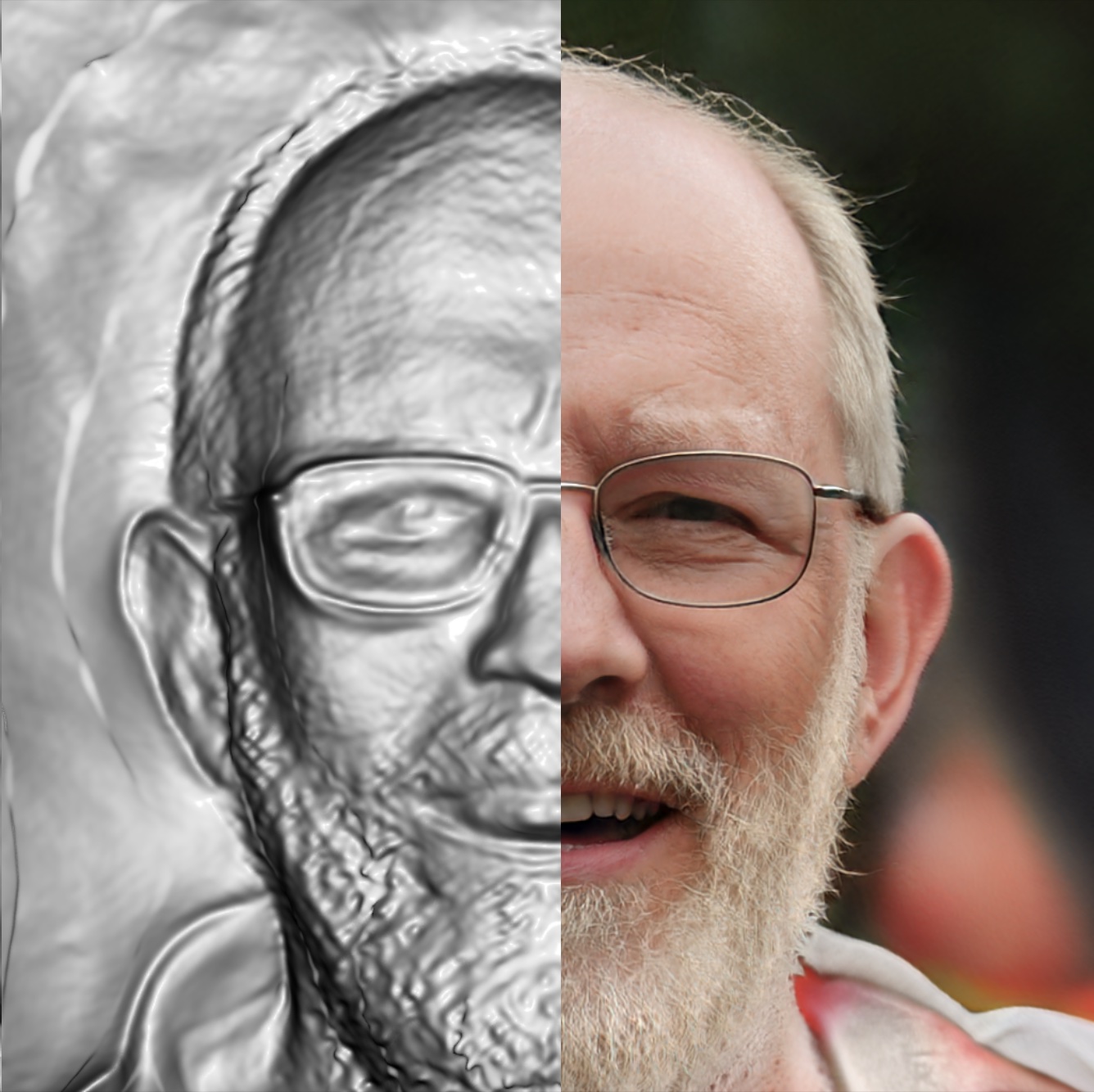

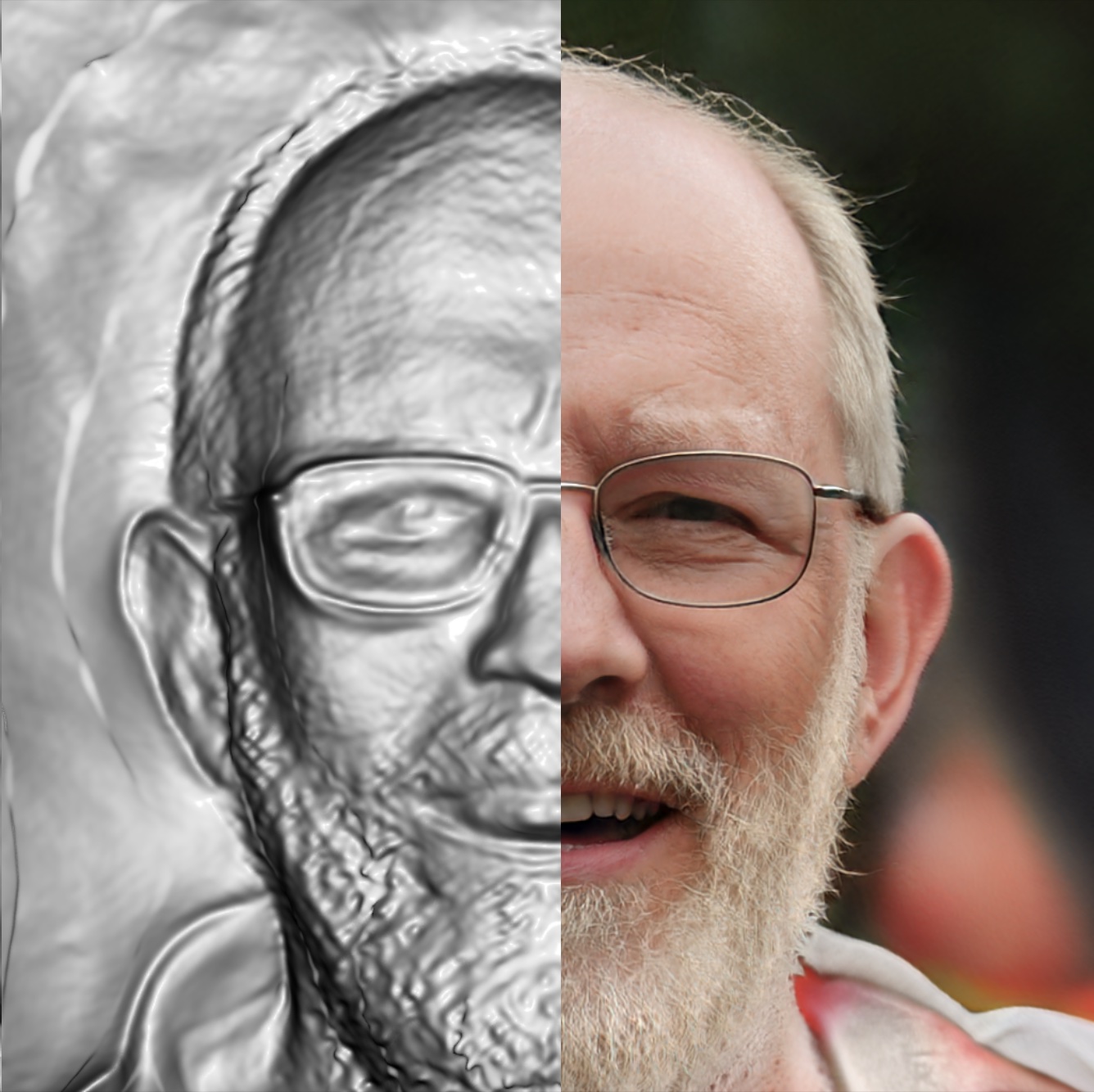

- Face reconstruction for Persona (3D FaceTime on Vision Pro)

- 24MP imaging on iPhone Pro

Research Highlights

I’m broadly interested in computer vision, machine learning, and computer graphics. Much of my research focuses on neural rendering, 3D reconstruction, and generative models. A complete list of my publications can be found on my Google Scholar profile.

HyperDiffusion: Generating Implicit Neural Fields with Weight-Space Diffusion

Ziya Erkoç, Fangchang Ma, Qi Shan, Matthias Nießner, Angela Dai

ICCV, 2023

[arXiv] [webpage]

Generative Multiplane Images: Making a 2D GAN 3D-Aware

Xiaoming Zhao, Fangchang Ma, David Güera, Zhile Ren, Alexander Schwing, Alex Colburn

ECCV, 2022 (Oral Presentation)

[arXiv] [code] [webpage]

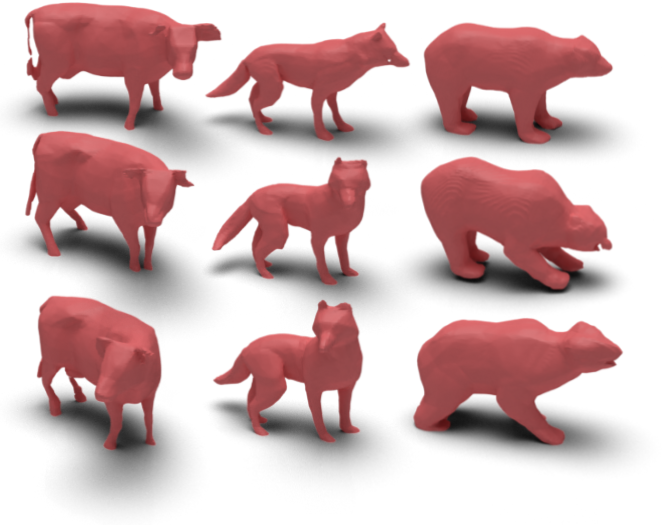

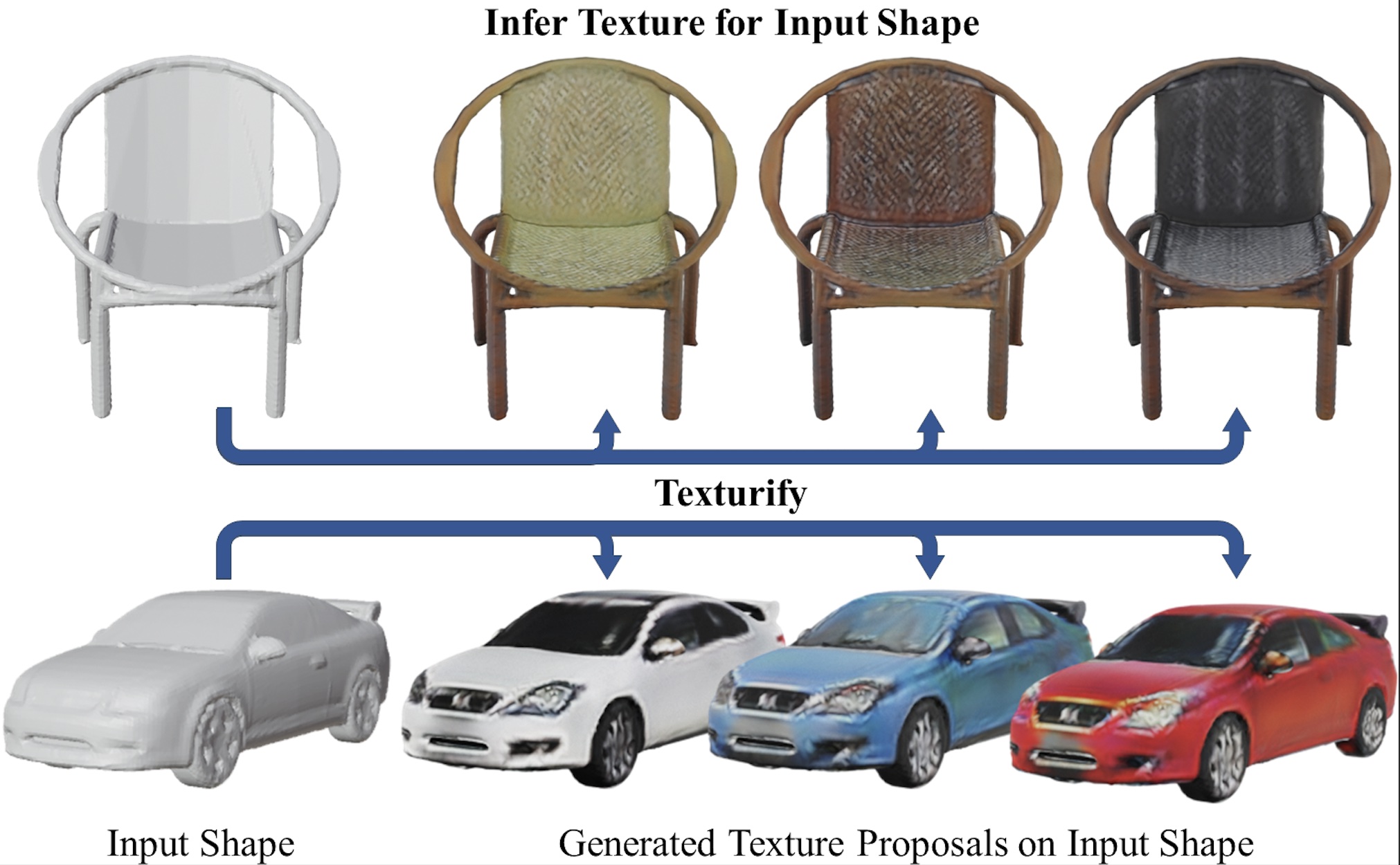

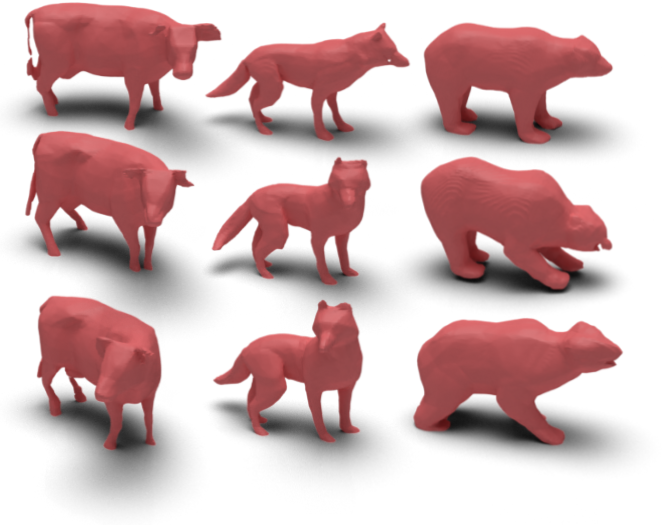

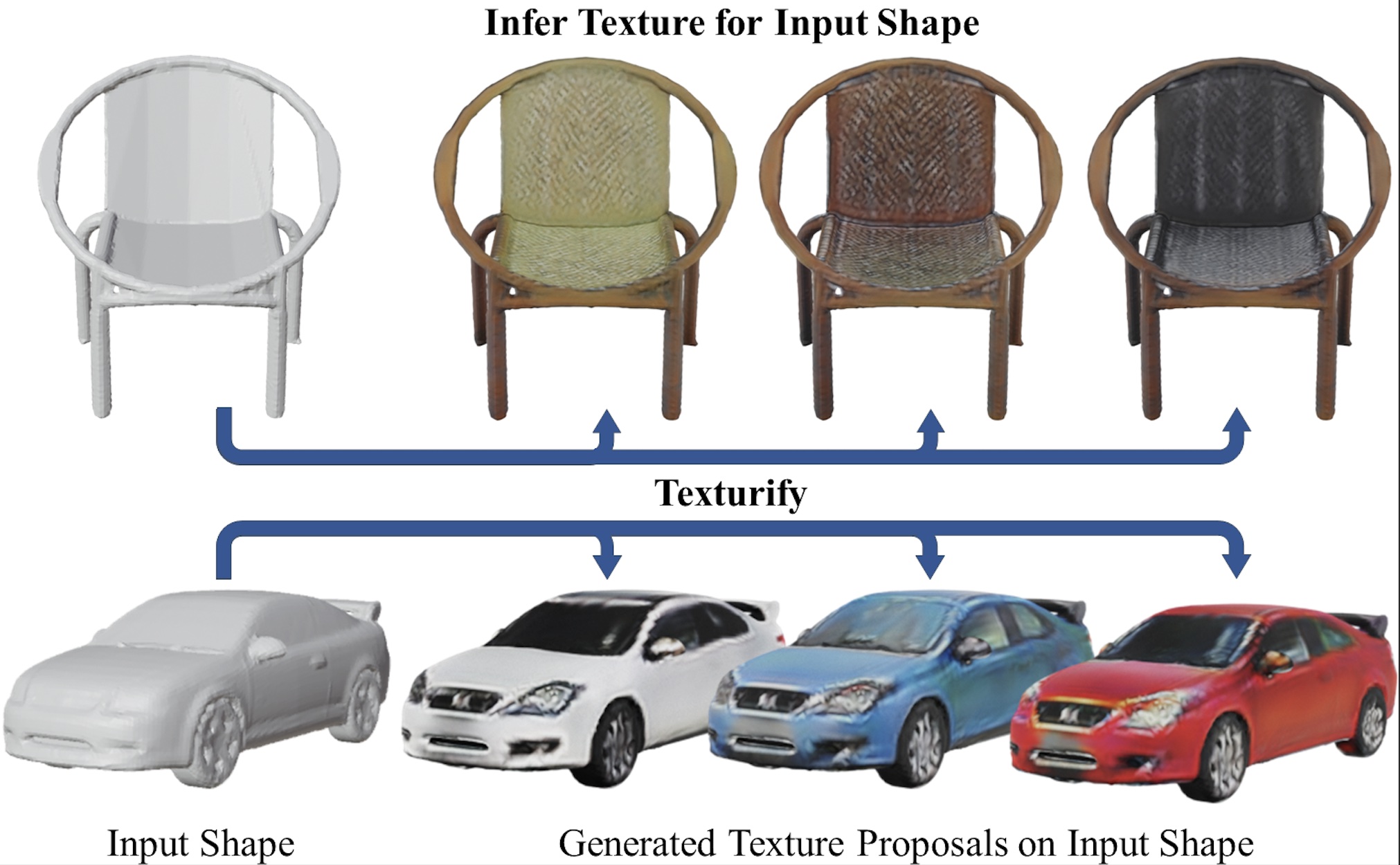

Texturify: Generating Textures on 3D Shape Surfaces

Yawar Siddiqui, Justus Thies, Fangchang Ma, Qi Shan, Matthias Nießner, Angela Dai

ECCV, 2022

[arXiv] [video] [webpage]

Self-supervised Sparse-to-Dense: Self-supervised Depth Completion from LiDAR and Monocular Camera

Fangchang Ma, Guilherme Venturelli Cavalheiro, Sertac Karaman

ICRA, 2019

[arXiv] [code] [video]

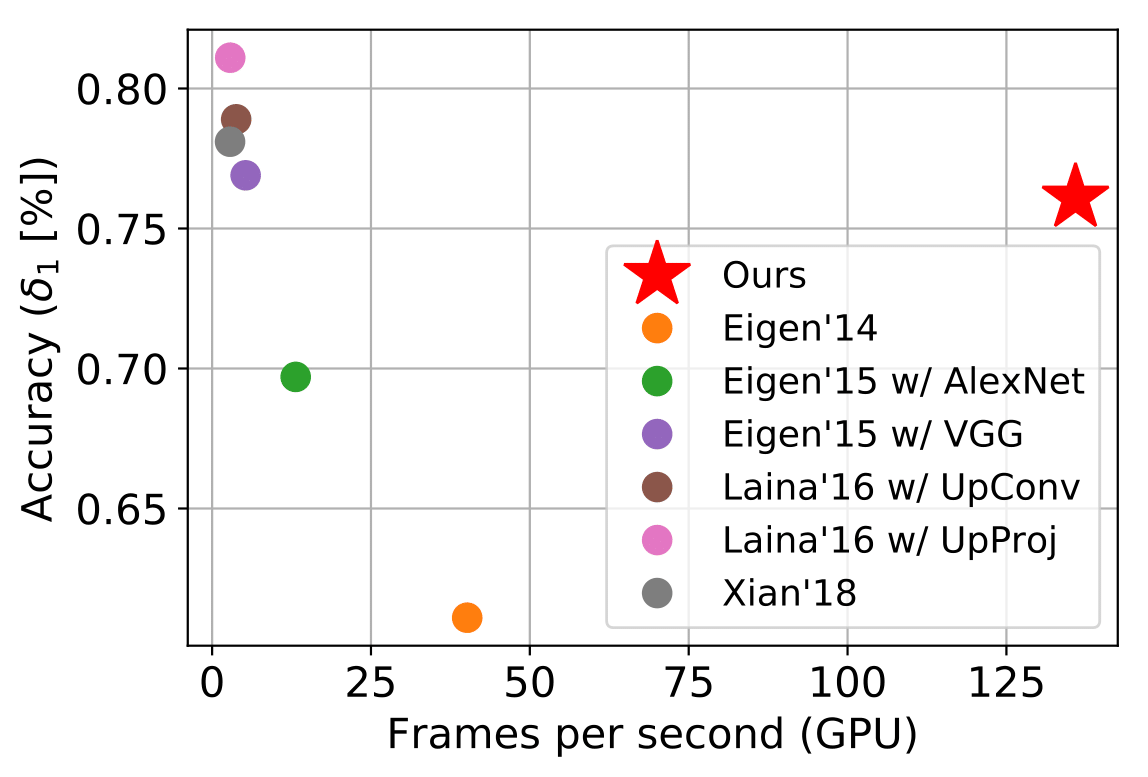

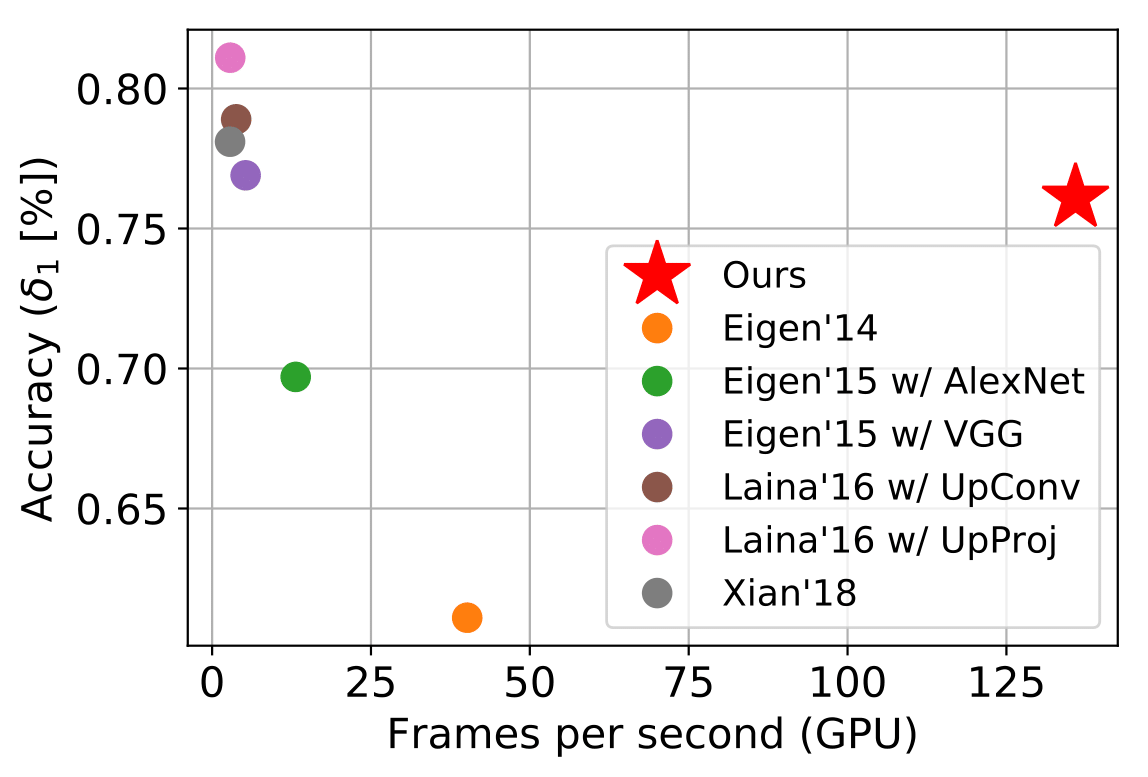

FastDepth: Fast Monocular Depth Estimation on Embedded Systems

Diana Wofk*, Fangchang Ma*, Tien-Ju Yang, Sertac Karaman, Vivienne Sze

ICRA, 2019

[arXiv] [code] [video] [webpage]

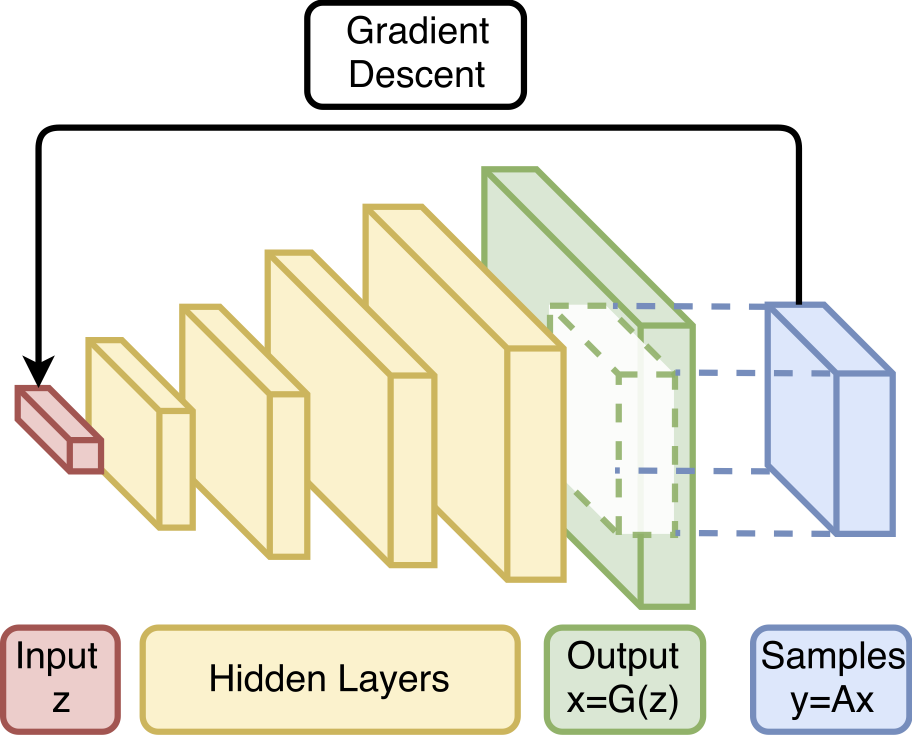

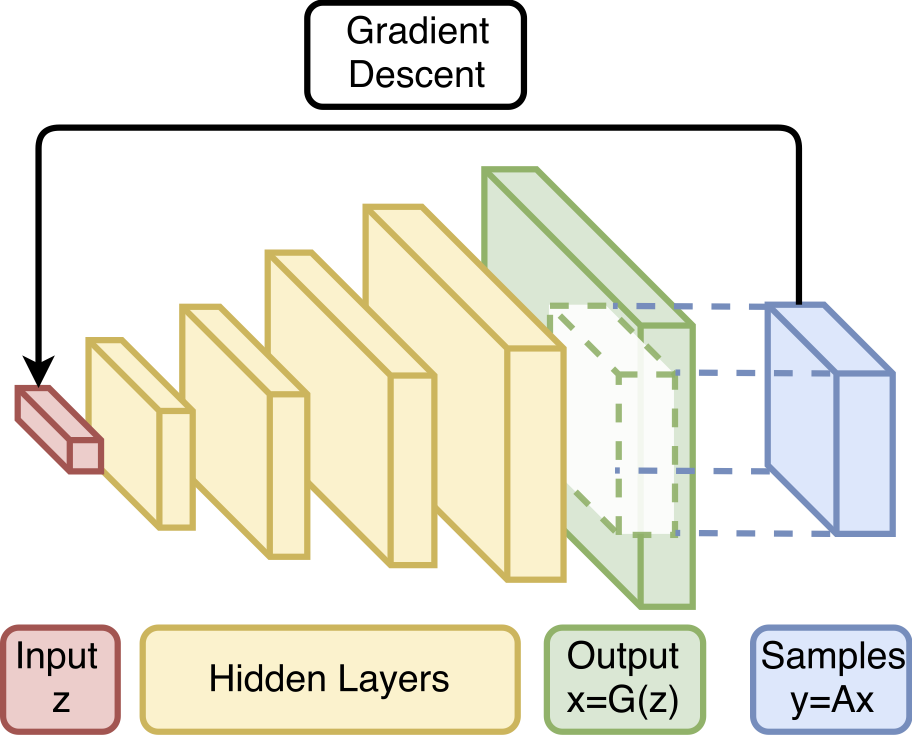

Invertibility of Convolutional Generative Networks from Partial Measurements

Fangchang Ma, Ulas Ayaz, Sertac Karaman

NeurIPS, 2018

[pdf] [supplementary] [code]

Sparse-to-Dense: Depth Prediction from Sparse Depth Samples and a Single Image

Fangchang Ma, Sertac Karaman

ICRA, 2018

[arXiv] [video] [PyTorch code] [Torch code]